One of the most immediate and tangible benefits of AI in motion design is the automation of manual, repetitive tasks such as rotoscoping, keyframing and object tracking. These tasks are essential to any motion design project, but they take a significant amount of time and effort when done by hand. AI can perform them quickly and accurately, leaving us designers more time for the creative aspects of our work.

For motion designers, one of the most labour-intensive tasks is rotoscoping. In After Effects, this task can be semi-automated with Roto Brush, an AI-assisted tool. With a few brush strokes, the tool 'recognises' the foreground object we want to keep and separates it from the background frame by frame, quickly and accurately. More recently, other AI tools such as Runway ML have offered similar ways to remove the background from footage.

Another task, essentially the flip side of the first, is removing an object from footage, which is now much easier with Content-Aware Fill in After Effects. What used to take hours of painstaking frame-by-frame work can now be done by creating a reference frame from the sequence, painting over the object we want to remove in Photoshop, and letting After Effects propagate the change to the rest of the footage. The recent addition of Generative Fill to Photoshop makes this task even easier, and other solutions are being developed, such as inpainting (replacing part of an image with generated artwork) using Stable Diffusion within Photoshop.
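To make the idea of propagating one cleaned frame across a shot more concrete, here is a deliberately naive sketch. It is not how After Effects works internally; it assumes a locked-off camera, single-channel pixels stored as flat arrays, and a hand-made mask, and the function and variable names are hypothetical. Every frame keeps its own pixels outside the mask and borrows the painted reference frame's pixels inside it.

```typescript
// One greyscale frame as a flat, row-major array of pixel values (simplified to one channel).
type Frame = number[];

// Hypothetical helper: copy the cleaned pixels of a painted reference frame into
// every frame of the shot, but only where the mask marks the object to remove.
// Assumes a locked-off camera, so a given pixel index shows the same spot in every frame.
function propagateCleanPlate(shot: Frame[], objectMask: boolean[], cleanReference: Frame): Frame[] {
  if (objectMask.length !== cleanReference.length) {
    throw new Error("mask and reference frame must be the same size");
  }
  return shot.map((frame) => {
    if (frame.length !== objectMask.length) {
      throw new Error("every frame must be the same size as the mask");
    }
    // Outside the mask keep the original pixel; inside it use the clean reference.
    return frame.map((pixel, i) => (objectMask[i] ? cleanReference[i] : pixel));
  });
}

// Usage: three 2x2 frames with an unwanted object in the top-right pixel (index 1).
const shot: Frame[] = [
  [10, 99, 10, 10],
  [11, 98, 11, 11],
  [12, 97, 12, 12],
];
const objectMask = [false, true, false, false];
const cleanReference: Frame = [10, 10, 10, 10];

const cleaned = propagateCleanPlate(shot, objectMask, cleanReference);
// cleaned is [[10, 10, 10, 10], [11, 10, 11, 11], [12, 10, 12, 12]]
```

Notice that the second and third frames receive the reference value 10 even though the rest of each frame has brightened. A fixed clean plate ignores lighting changes and camera movement, which real object-removal tools also have to account for.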

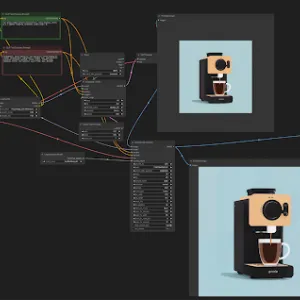

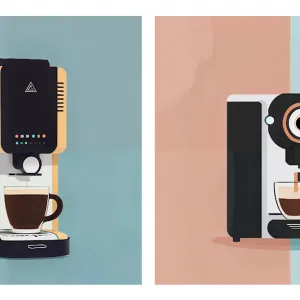

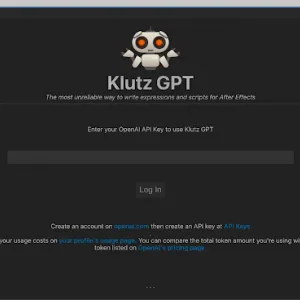

In a recent project, we used ChatGPT to build automated scripts for After Effects in its expression language, which is based on JavaScript. This allowed us to create custom scripts for specific actions, as well as smarter templates for our Essential Graphics workflow.
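After Effects expressions are short pieces of JavaScript that After Effects evaluates on every frame, using host-provided values such as `time` and `inPoint` and helpers such as `linear()` and `sourceRectAtTime()`, so they cannot run on their own. To show the kind of logic involved, the sketch below models two typical expressions in TypeScript: a keyframe-free fade-in (a specific action) and a background box that resizes with its text (a template behaviour). These are illustrative examples, not the scripts from our project; `linear()` is re-implemented here from its documented behaviour, and the 0.5-second fade, 20-pixel padding and layer name "Title" are hypothetical values.

```typescript
// TypeScript model of two common After Effects expressions, so their logic can be
// run and checked outside After Effects. Inside AE, `time`, `inPoint`, `linear()`
// and `sourceRectAtTime()` are provided by the host.

// Re-implementation of AE's linear(t, tMin, tMax, value1, value2):
// value1 at or before tMin, value2 at or after tMax, a straight line in between.
function linear(t: number, tMin: number, tMax: number, value1: number, value2: number): number {
  if (t <= tMin) return value1;
  if (t >= tMax) return value2;
  return value1 + ((t - tMin) / (tMax - tMin)) * (value2 - value1);
}

// Specific action: fade a layer in over its first `fadeDuration` seconds.
// Equivalent expression on the layer's Opacity (hypothetical 0.5 s fade):
//   linear(time, inPoint, inPoint + 0.5, 0, 100)
function fadeInOpacity(time: number, inPoint: number, fadeDuration = 0.5): number {
  return linear(time, inPoint, inPoint + fadeDuration, 0, 100);
}

// Shape of the object AE's sourceRectAtTime() returns.
interface SourceRect {
  top: number;
  left: number;
  width: number;
  height: number;
}

// Template behaviour: a background box that grows with its text layer.
// Equivalent expression on a Rectangle Path's Size (layer name "Title" is hypothetical):
//   var r = thisComp.layer("Title").sourceRectAtTime(time, false);
//   [r.width + 2 * 20, r.height + 2 * 20]
function backgroundBoxSize(text: SourceRect, padding = 20): [number, number] {
  return [text.width + 2 * padding, text.height + 2 * padding];
}

// Usage: a layer that starts at 2 s, and a 300 x 48 px title.
const opacityAtStart = fadeInOpacity(2.0, 2.0); // 0
const opacityMidFade = fadeInOpacity(2.25, 2.0); // 50
const opacityAfterFade = fadeInOpacity(3.0, 2.0); // 100
const boxSize = backgroundBoxSize({ top: -40, left: -150, width: 300, height: 48 });
// boxSize is [340, 88]
```

In After Effects itself only the one-line expressions in the comments are needed; the surrounding TypeScript exists purely so the logic can be checked before it goes onto a property.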